Machine Learning with TensorFlow & scikit-learn

My entry point into coding. These projects explored supervised learning, data preprocessing, and predictive modeling on real-world datasets. I learned to use TensorFlow and scikit-learn to build and train models, evaluate their performance, and make predictions. The models I built include linear regression, decision trees, random forests, and neural networks, applied to problems ranging from evaluating the risk of heart disease to predicting house prices, and even classifying dog breeds!
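To illustrate the simplest model on that list, here is a minimal sketch of ordinary least-squares linear regression in plain Python. It is not code from these projects, which used scikit-learn and TensorFlow (in scikit-learn the equivalent is `sklearn.linear_model.LinearRegression`). The function names `fit_line` and `predict` and the house-price numbers are made up for illustration.

```python
from statistics import mean


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) minimizing squared error for y ≈ slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired points")
    x_bar, y_bar = mean(xs), mean(ys)
    # Least-squares slope = covariance(x, y) / variance(x) (the 1/n factors cancel).
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    # The fitted line always passes through the point of means (x_bar, y_bar).
    intercept = y_bar - slope * x_bar
    return slope, intercept


def predict(slope: float, intercept: float, x: float) -> float:
    return slope * x + intercept


# Toy data (made up): house size in square feet vs. price in dollars.
sizes = [1000, 1500, 2000]
prices = [200_000, 300_000, 400_000]
slope, intercept = fit_line(sizes, prices)
print(predict(slope, intercept, 1800))  # → 360000.0
```

Libraries like scikit-learn generalize this to many input features and add regularization, but the core idea of minimizing squared error is the same.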

Reddit Sentiment Analysis

This project was conceived when I was still a beginner in the world of coding. I wanted to apply my early understanding of machine learning to build a stock price predictor. At the time, I had no idea which metrics to use, so I turned to sentiment analysis. I figured that if the market reacts to collective expectations and emotions, then analyzing the tone of news articles and social media posts might reveal something useful.
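One common way to score the tone of a post is a lexicon approach: count positive and negative words and normalize. The sketch below assumes tiny, hypothetical word lists (`POSITIVE`, `NEGATIVE`); it is not necessarily how this project scored posts, and real lexicons such as VADER are far larger and weighted.

```python
import re

# Hypothetical, deliberately tiny word lists for illustration only.
POSITIVE = {"bullish", "gain", "gains", "beat", "growth", "strong", "up"}
NEGATIVE = {"bearish", "loss", "losses", "miss", "weak", "down", "crash"}


def sentiment_score(text: str) -> float:
    """Score in [-1, 1]: (positive hits - negative hits) / total hits, or 0.0 if no hits."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total


def average_sentiment(posts: list[str]) -> float:
    """Mean score across a batch of posts, e.g. all posts mentioning one ticker."""
    scores = [sentiment_score(p) for p in posts]
    return sum(scores) / len(scores) if scores else 0.0


print(sentiment_score("Strong earnings beat, stock up"))  # → 1.0
```

A word-count score like this ignores negation and sarcasm ("not bullish"), which is one reason sentiment alone is a noisy signal for price movement.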

While my ideas and execution weren't perfect, this project introduced me to data analytics, user interfaces, and databases. I even began running my scrapers in parallel threads to maximize speed and efficiency. It was a chaotic but exciting beginning.
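Running scrapers on threads pays off because scraping is I/O-bound: most of the time is spent waiting on the network. Below is a minimal sketch using the standard library's `ThreadPoolExecutor`; `scrape_all` and `fake_fetch` are hypothetical names, and the stand-in fetcher keeps the example runnable offline.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def scrape_all(urls: list[str], fetch: Callable[[str], str], max_workers: int = 8) -> dict[str, str]:
    """Run fetch(url) for every URL on a thread pool and return {url: result}.

    While one thread waits on the network, the others keep working.
    A failure on one site is recorded instead of aborting the whole batch.
    """
    results: dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Submit every job up front; each future runs fetch() on a worker thread.
        futures = {url: pool.submit(fetch, url) for url in urls}
        for url, future in futures.items():
            try:
                results[url] = future.result()  # blocks until this URL finishes
            except Exception as exc:
                results[url] = f"ERROR: {exc}"
    return results


# Stand-in fetcher so the example runs offline; a real one might wrap
# urllib.request.urlopen(url, timeout=10).read().decode().
def fake_fetch(url: str) -> str:
    if "bad" in url:
        raise ValueError("timeout")
    return f"<html>{url}</html>"


print(scrape_all(["site-a", "site-b", "bad-site"], fake_fetch, max_workers=3))
```

Threads suit this I/O-bound work in Python; CPU-heavy steps such as model inference would benefit more from processes.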

Article Summarization and Sentiment

This was the first project where I genuinely felt like I was building something meaningful. I had been closely following the evolution of the GPT API and was eager to experiment with its potential. When a relative who runs a YouTube channel mentioned the struggle of finding and condensing news articles, I saw the perfect opportunity to apply what I had learned.

What started as a simple idea quickly evolved into a fully functional article summarization tool. It began as a set of threaded Selenium scripts and later moved to a more efficient system built on custom web crawlers and spiders. Given a list of news sites and topics, the tool would automatically visit each site, search for the topics, extract the article content, summarize it with GPT, and display the results dynamically in a clean frontend, all without my having to write a separate scraper for each website.
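One way to extract article text without a per-site scraper is a generic heuristic: most news pages put body text inside `<p>` elements. The sketch below applies that heuristic with the standard library's `html.parser`. It is an illustrative assumption, not the crawler this tool used, and it leaves out the search and GPT summarization steps. `ParagraphExtractor`, `extract_article_text`, and the `min_words` cutoff are hypothetical.

```python
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collect text inside <p> tags, ignoring anything inside <script> or <style>."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.paragraphs: list[str] = []
        self._in_p = 0
        self._skip = 0
        self._buf: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip += 1
        elif tag == "p":
            self._in_p += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip:
            self._skip -= 1
        elif tag == "p" and self._in_p:
            self._in_p -= 1
            if self._in_p == 0:
                # Collapse runs of whitespace into single spaces.
                text = " ".join("".join(self._buf).split())
                if text:
                    self.paragraphs.append(text)
                self._buf = []

    def handle_data(self, data):
        if self._in_p and not self._skip:
            self._buf.append(data)


def extract_article_text(html: str, min_words: int = 5) -> str:
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    # Drop very short paragraphs, which are often navigation, bylines, or ads.
    kept = [p for p in parser.paragraphs if len(p.split()) >= min_words]
    return "\n\n".join(kept)
```

The trade-off is robustness over precision: the heuristic works on most sites unchanged but can pick up captions or boilerplate paragraphs. That is acceptable when the text is headed for a summarizer anyway.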

It was my first real dive into building an end-to-end pipeline, and it motivated me to keep going deeper.

YouTube Auto Commenting

Another request was for help managing a YouTube channel. It's often said that interacting with the community and driving engagement is key to growing a channel.

Putting my skills to the test, I decided to build a bot that would automatically comment on videos based on certain criteria. Using Google's OAuth 2.0 and the YouTube API, I built a bot that would gather a user's posted videos, analyze each video's context, collect its comments, load a list of AI personalities, generate a comment, and post it.
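The "load a personality, generate, post" steps can be sketched as two small pieces: assembling a prompt for a language model from the video's context and a persona, and building the request body for posting a top-level comment. The personas, `build_prompt`, `pick_persona`, and `comment_thread_body` are hypothetical. The body follows the YouTube Data API v3 `commentThreads.insert` request shape as I understand it, so verify it against that method's reference before relying on it.

```python
import random

# Hypothetical personas; the bot's actual personality list isn't shown here.
PERSONALITIES = [
    {"name": "enthusiast", "style": "upbeat and encouraging"},
    {"name": "analyst", "style": "curious, and asks one specific follow-up question"},
]


def pick_persona(rng: random.Random) -> dict:
    """Choose a persona so consecutive comments don't all sound the same."""
    return rng.choice(PERSONALITIES)


def build_prompt(persona: dict, title: str, description: str, comments: list[str]) -> str:
    """Assemble a text prompt for a language model from video context and a persona."""
    sample = "\n".join(f"- {c}" for c in comments[:5])  # a few existing comments for context
    return (
        f"You are a viewer whose tone is {persona['style']}.\n"
        f"Video title: {title}\n"
        f"Description: {description}\n"
        f"Some existing comments:\n{sample}\n"
        "Write one short, relevant comment. Do not repeat the existing comments."
    )


def comment_thread_body(video_id: str, text: str) -> dict:
    """Request body for a new top-level comment (commentThreads.insert, part='snippet')."""
    return {
        "snippet": {
            "videoId": video_id,
            "topLevelComment": {"snippet": {"textOriginal": text}},
        }
    }


persona = pick_persona(random.Random(42))
print(build_prompt(persona, "Q3 market recap", "A quick summary", ["Great video", "Thanks!"]))
```

With the `google-api-python-client` library and OAuth credentials, the body would be sent with something like `youtube.commentThreads().insert(part="snippet", body=body).execute()`, where `youtube` comes from `googleapiclient.discovery.build("youtube", "v3", credentials=creds)`.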

CCNA Network Tools

After finishing a few projects, I set out to explore a career in IT. Along the way, my curiosity pulled me down the rabbit hole of how computers actually communicate with one another. I dove into the fundamentals: ports, local networks, routing, and the countless moving parts that make modern networks function.
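Two CCNA-level ideas, local subnets and routing, can be shown with Python's standard `ipaddress` module. The sketch below checks whether two hosts share a subnet and performs a longest-prefix-match lookup, which is the rule routers use to choose among overlapping routes. The routing table and function names are hypothetical; the images below show the actual tools.

```python
import ipaddress
from typing import Optional


def same_subnet(a: str, b: str, prefix: int) -> bool:
    """True if hosts a and b land in the same network for the given prefix length."""
    net_a = ipaddress.ip_interface(f"{a}/{prefix}").network
    net_b = ipaddress.ip_interface(f"{b}/{prefix}").network
    return net_a == net_b


def lookup_route(routes: dict[str, str], destination: str) -> Optional[str]:
    """Longest-prefix match: return the next hop of the most specific matching route."""
    dest = ipaddress.ip_address(destination)
    best_net, best_hop = None, None
    for cidr, next_hop in routes.items():
        net = ipaddress.ip_network(cidr)
        if dest in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_hop = net, next_hop
    return best_hop


# Hypothetical routing table: CIDR block -> next hop.
routes = {
    "0.0.0.0/0": "ISP gateway",  # default route, matches every IPv4 address
    "10.0.0.0/8": "core router",
    "10.1.2.0/24": "lab switch",
}
print(lookup_route(routes, "10.1.2.55"))  # → lab switch
print(same_subnet("192.168.1.10", "192.168.2.10", 24))  # → False
```

A /24 prefix keeps the first 24 bits as the network part, so 192.168.1.x and 192.168.2.x are different networks. With a /16 prefix, the same two hosts would share one network.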

Even though I didn’t end up pursuing a career in IT, that deep dive gave me a solid foundation in how the internet really works. That knowledge has stuck with me and even came in handy when I built and launched this website, which now runs smoothly on AWS.

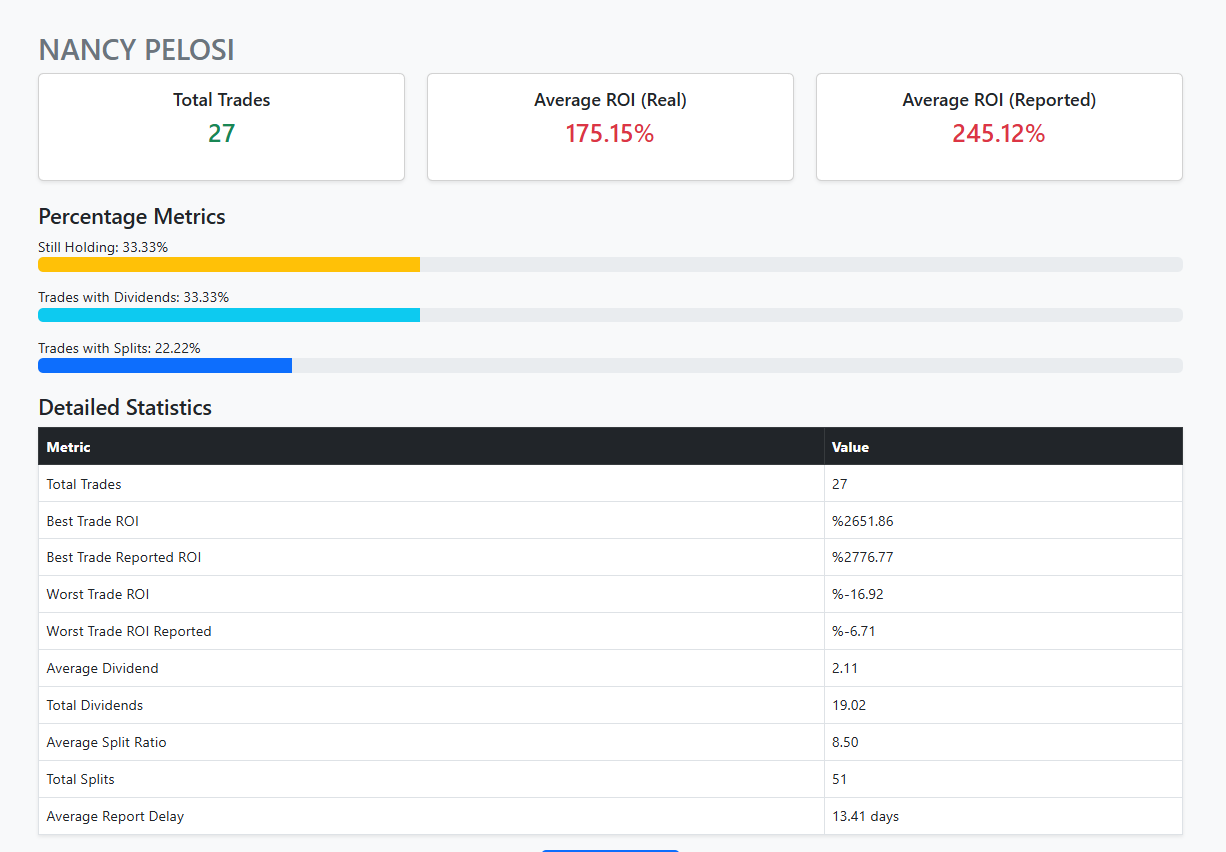

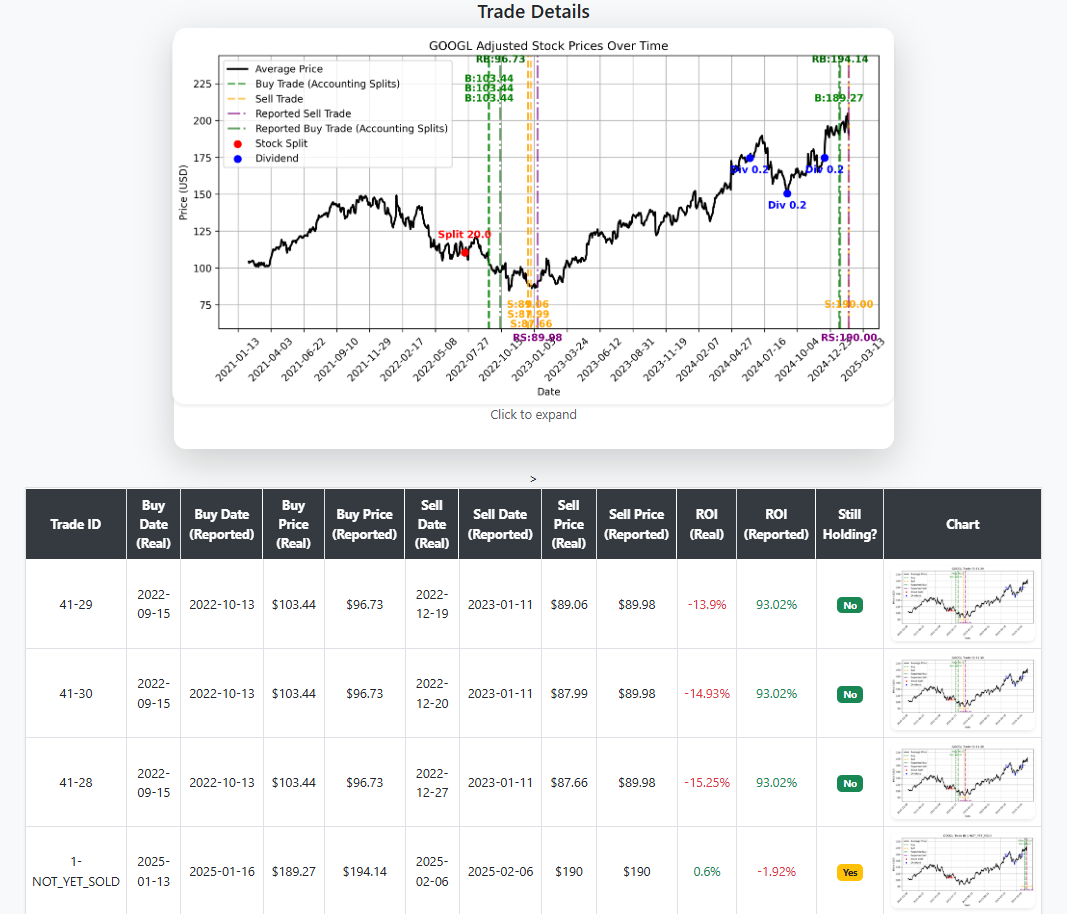

Insider Trading Bot

Have you ever looked at the stock market and been astounded by the sheer amount of growth and potential return on investment sitting there untapped? I have. Instead of building an algorithm to predict the future, I decided to just copy the smart kid's homework.

I built a bot that would gather politicians' trading data, fill in missing values using public stock market data, chart the results, and produce a report. The bot would then analyze the data and recommend which stocks to buy.
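The "fill in missing data" and "recommend" steps might look like the sketch below. Each trade lacking a price gets the most recent closing price on or before its date, and tickers are ranked by how many distinct politicians bought them. The trade record fields, the ranking rule, and all function names are hypothetical assumptions for illustration, not the bot's actual logic. Disclosure filings generally report dollar ranges rather than execution prices, which is one reason prices need filling in.

```python
from bisect import bisect_right
from collections import defaultdict
from datetime import date
from typing import Optional


def price_on_or_before(closes: list[tuple[date, float]], day: date) -> Optional[float]:
    """Last closing price on or before `day`; `closes` must be sorted by date."""
    dates = [d for d, _ in closes]
    i = bisect_right(dates, day)  # index just past the last date <= day
    return closes[i - 1][1] if i else None


def fill_missing_prices(trades: list[dict], history: dict) -> list[dict]:
    """Return copies of the trades with any missing 'price' filled from price history."""
    filled = []
    for t in trades:
        price = t["price"]
        if price is None:
            price = price_on_or_before(history.get(t["ticker"], []), t["date"])
        filled.append({**t, "price": price})
    return filled


def rank_by_buyers(trades: list[dict], top_n: int = 3) -> list[tuple[str, int]]:
    """Rank tickers by the number of distinct politicians who bought them (a naive signal)."""
    buyers: dict[str, set] = defaultdict(set)
    for t in trades:
        if t["type"] == "buy":
            buyers[t["ticker"]].add(t["politician"])
    # Most buyers first; ties broken alphabetically for stable output.
    ranked = sorted(buyers.items(), key=lambda kv: (-len(kv[1]), kv[0]))
    return [(ticker, len(people)) for ticker, people in ranked[:top_n]]


# Made-up sample data.
history = {"AAA": [(date(2024, 1, 2), 10.0), (date(2024, 1, 3), 11.0), (date(2024, 1, 5), 12.0)]}
trades = [
    {"politician": "P1", "ticker": "AAA", "type": "buy", "date": date(2024, 1, 4), "price": None},
    {"politician": "P2", "ticker": "AAA", "type": "buy", "date": date(2024, 1, 5), "price": 12.5},
    {"politician": "P1", "ticker": "BBB", "type": "buy", "date": date(2024, 1, 5), "price": 50.0},
    {"politician": "P3", "ticker": "BBB", "type": "sell", "date": date(2024, 1, 5), "price": 51.0},
]
print(rank_by_buyers(fill_missing_prices(trades, history)))  # → [('AAA', 2), ('BBB', 1)]
```

Using the last close on or before the trade date avoids look-ahead: the filled price never uses information from after the trade.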